I’m having an issue lately that I can’t put a target on. I’m hoping to ask for troubleshooting tips to help identify the cause. I’m running DietPi 6.24.1 on a Rock64 board (I believe it also happened on the previous 1-2 releases). I run a few things on here, so it is possible that an apt package update or a software version update is the root cause. I’m trying to see what troubleshooting tools are available in this scenario to help identify it. Packages running include Node-Red, HomeAssistant, PiHole, and NetData. The way I know that the issue has happened is through hc.io health checks running against the HomeAssistant web UI.

The scenario goes like this: once the device comes up, it will run fine for roughly 1-2 days. After this time, it will either reboot or freeze. A reboot will cause hc.io notifications that the service is down, then roughly 5-10 minutes later it comes back up. If it lasts longer than this, then it has frozen. What I mean by frozen is that it no longer responds to any network activity. The IP no longer responds to pings and I cannot access the device via SSH. At this time I don’t have any easy way, based on its location, to access the console or plug in a monitor/keyboard to do more in-depth troubleshooting.

What I’m hoping for is a logging utility or troubleshooting tool that can dump the currently running system logs to non-volatile storage, rather than the usual clearing of log data after a reboot. Any thoughts, ideas, or help you guys can provide? I appreciate any input at this point since this is driving me nuts. Controlling my HA systems with this means that it dies every few days. We rely heavily on our voice assistants working through HA.

1activegeek

First of all check dmesg for any red lines, possibly related to voltage/power issues or disk I/O errors.
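A minimal sketch of that first check, for cases where the coloured output is not available (for example over a plain SSH session): the filter below keeps only kernel log lines that hint at power or storage trouble. The function name scan_kernel_log and the pattern list are my own assumptions, not a DietPi tool, so adjust the patterns to your board.

```shell
# Hypothetical helper: read kernel log lines on stdin and keep the ones
# that commonly point at power or storage problems.
# The pattern list is an assumption; extend it as needed.
scan_kernel_log() {
    grep -i -E 'under-?voltage|i/o error|ext4-fs (error|warning)|mmc[0-9]+: .*(error|timeout)|oom-killer'
}

# Usage (on the affected device):
#   dmesg | scan_kernel_log
```

On systems with the util-linux dmesg, dmesg --level=err,warn is another way to narrow the output by severity instead of by keyword.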

To have boot persistent system logs, so you can check last entries before freeze:

dietpi-software > Uninstall DietPi-RAMlog > reboot to apply (required to disable the tmpfs without losing any existing logs).

Enable boot persistent system logs: mkdir /var/log/journal

Then after a freeze occurred or also just a hang/restart of HA: journalctl

- Scroll to the end to see last entries, or optionally to have reverse order, so see last entries at the top: journalctl -r
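The steps above can be sketched as a small helper. This assumes journald runs with Storage=auto (the upstream default), in which case the mere existence of /var/log/journal switches it to persistent storage. The function name and the optional directory argument are hypothetical and only there so it can be tried on a scratch path first.

```shell
# Sketch: create the journal directory so journald (assuming Storage=auto)
# keeps logs across reboots. Pass a scratch path as $1 to try it safely.
enable_persistent_journal() {
    dir="${1:-/var/log/journal}"
    mkdir -p "$dir" || return 1
    echo "journal directory ready: $dir"
}

# Usage as root, after DietPi-RAMlog has been uninstalled:
#   enable_persistent_journal
#   reboot
# After the next freeze or unexpected reboot:
#   journalctl --list-boots    # one line per recorded boot
#   journalctl -b -1 -r        # previous boot, newest entries first
```

The -b -1 selector limits the output to the boot before the current one, which is usually where the last messages before a freeze end up.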

Thanks for the input MichaIng - I was perusing dmesg originally and not seeing anything of use. Today though I do notice the below block of log repeating. A quick google didn’t turn up much that was useful beyond the purpose of lost+found. I will look at setting up the persistent logging so I can try to check what’s going on after it fails. It’s intermittent beyond belief lately. The other day (while I was away, nonetheless) the thing was rebooting almost every hour through the night. And then again at times it has just gone down and stayed down until I power cycle the PoE switch port. Super aggravating at this point. Thanks for the pointers - hope I can catch this again in the next day or so.


[ 2090.326503] EXT4-fs warning (device zram0): ext4_dirent_csum_verify:353: inode #11: comm find: No space for directory leaf checksum. Please run e2fsck -D.

[ 2090.326519] EXT4-fs error (device zram0): ext4_readdir:189: inode #11: comm find: path /var/log/lost+found: directory fails checksum at offset 4096

[ 2090.327424] EXT4-fs warning (device zram0): ext4_dirent_csum_verify:353: inode #11: comm find: No space for directory leaf checksum. Please run e2fsck -D.

[ 2090.327435] EXT4-fs error (device zram0): ext4_readdir:189: inode #11: comm find: path /var/log/lost+found: directory fails checksum at offset 8192

[ 2090.328472] EXT4-fs warning (device zram0): ext4_dirent_csum_verify:353: inode #11: comm find: No space for directory leaf checksum. Please run e2fsck -D.

[ 2090.328489] EXT4-fs error (device zram0): ext4_readdir:189: inode #11: comm find: path /var/log/lost+found: directory fails checksum at offset 12288
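To see at a glance which block device these messages belong to, they can be counted per device. This is only a sketch with a hypothetical function name. In the excerpt above every line names zram0 and the path /var/log/lost+found, so the complaints concern the compressed RAM device that backs /var/log, not the SD card.

```shell
# Hypothetical helper: count EXT4 error/warning lines per device from
# kernel log text on stdin, printed as "device: count".
ext4_issues_by_device() {
    sed -n -E 's/.*EXT4-fs (error|warning) \(device ([^)]*)\).*/\2/p' \
        | sort | uniq -c | awk '{ print $2 ": " $1 }'
}

# Usage:
#   dmesg | ext4_issues_by_device
```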

1activegeek

Ah this must be the zRam implementation that comes with ARMbian… Seems to cause issues here.

Please do the following:

G_CONFIG_INJECT 'ENABLED=' 'ENABLED=false' /etc/default/armbian-zram-config

rm /etc/cron.*/armbian*

dietpi-services stop

/DietPi/dietpi/func/dietpi-ramlog 1

for i in /lib/systemd/system/armbian*

do

systemctl disable --now $i

done

umount /var/log

rm -R /var/log

mkdir /var/log

mount /var/log

/DietPi/dietpi/func/dietpi-ramlog 0

rm -R /var/log/lost+found

dietpi-services start
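Before running the loop above, it may be worth listing what it would touch. The helper below is a hypothetical dry run: it only prints the names of the armbian* unit files in a directory and disables nothing. The default directory is the one used in the loop.

```shell
# Hypothetical dry-run helper: print the unit names the loop above would
# act on, without disabling anything.
list_armbian_units() {
    unit_dir="${1:-/lib/systemd/system}"
    for f in "$unit_dir"/armbian*; do
        [ -e "$f" ] || continue   # glob matched nothing
        basename "$f"
    done
}

# Usage:
#   list_armbian_units
```

If it prints nothing, there are no matching unit files in that directory and the disable loop has nothing to do.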

Added a patch for DietPi v6.25 to re-remove ARMbian services (and some related files), as some of them are new, so got installed and enabled with the last linux-root-* package upgrade: https://github.com/MichaIng/DietPi/commit/0e3010e0878c3b46de8513330fcccf6c8c18e7e9

Thank you for this. Is it possible this was the cause of the reboots? Something in that function or functions that was causing a conflict of sorts at certain points? I strongly suspect this started maybe 3-4 releases ago. I wanted to blame my HA software, not DietPi, but perhaps I should have been more suspicious?

We’ll see how this goes. I will say I also noticed that little extra bit of screen from something about ARMbian on boot too - like a MOTD that was being overwritten by the DietPi one.

Crossing my fingers for now. I also adjusted the lines you gave me to add /system to the directory path, i.e. for i in /lib/systemd/system/armbian*, so that it points to the location of the systemd files. Thankfully I understood what you were trying to do.

Most of the time, flaky restarts and instability are due to power.

The output of the adapter may be 5+ V DC, but through a cheap cable the voltage at the device can be well below 5 V DC…

Well, I can try testing a different power source, but right now this is using what should be a pretty solid one. I’m using the Rock64’s own PoE adapter. It’s been running solidly for quite some time as well. This only started more recently (say the past 2+ months). I’ve been fighting it for a while and unfortunately haven’t had time to sit and troubleshoot extensively due to work travels.

1activegeek

Ah lol, now I recognised the missing “system/” above. Indeed, good that you found it.

I added this cleanup to v6.25 patch as well btw, including the removal of all the services and traces: https://github.com/MichaIng/DietPi/blob/dev/dietpi/patch_file#L2051-L2067

I hope this indeed solves your random reboot/crash issues. What I am interested in is if any APT updates from the ARMbian repo will reinstall those files (most likely) and re-enable the services (hopefully not).

Would be great if you could check back from time to time if this prints some active result: systemctl status armbian*
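For a periodic check, a narrower view than the full status output may be easier to read. The sketch below filters the output of systemctl list-unit-files and prints only unit files whose state is "enabled". The function name is hypothetical. Quoting the pattern keeps the shell from expanding armbian* against files in the current directory.

```shell
# Hypothetical filter: from `systemctl list-unit-files` output on stdin,
# print the unit files whose state column is "enabled".
enabled_units() {
    awk '$2 == "enabled" { print $1 }'
}

# Usage (e.g. after an APT upgrade):
#   systemctl list-unit-files --no-legend 'armbian*' | enabled_units
```

No output would mean that no matching unit file is currently enabled, even if a package upgrade has reinstalled the files.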

Ya, I checked the git link you posted. I did get it working for now. I’ll try to set up a reminder to check that status info after the apt updates that I run (usually every other week on Sundays).

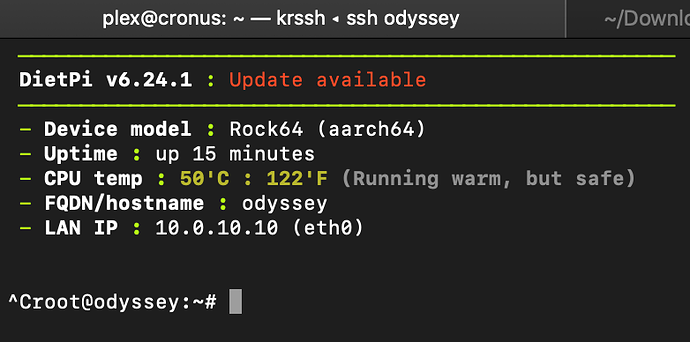

It would seem so far it’s “working” - but I’m still finding that the device is having some reboots, it just isn’t crashing the system anymore like it used to. What I mean by this is that I’m able to log in and see that the uptime is not days (since my last crash alert), it is some number of hours or minutes in some instances. I also notice that sometimes when logging in, it sort of gets hung up trying to display the full MOTD banner, gets through like 80% of the info and then sits like it’s running but hasn’t finished. CTRL+C cancels it and I get a prompt, but it’s just odd behavior.

I may in the end decide to wipe it clean again and start anew on a separate SD card. Possibly some comparison on the images. But for now at least it’s better than it was - went away for the whole week and it didn’t seem to crash at all to the point where I had to go power cycling the PoE port for it. Thanks for the help!!

1activegeek

As mentioned above, another way is to disable RAMlog and enable persistent journald log, so you can check last entries before the reboot.

Does it indeed hang on the MOTD, i.e. when accessing the internet to retrieve the current MOTD (although this should only occur once a day)? This has a timeout of 2 seconds (to not delay the banner too much), so if it didn’t get everything by then, you end up with an empty MOTD until it gets reset by the daily cron job.

But if it hangs longer on network access, there might be some other issue. I’m not sure whether PoE and network access itself can conflict/interfere at times; that is definitely something to check in journalctl/dmesg and the persistent logs.

One last other idea, as this caused several different issues, is that the device ran out of entropy. Should not lead to a reboot but to hanging boot/network/services, at least for a while after boot. As we anyway install this on all DietPi systems with v6.25 you might want to give it a shot already: G_AGI haveged

Ah, to check whether entropy is indeed an issue: dmesg | grep 'crng init' should show a timestamp of a few seconds. If this is, let’s say, 20 seconds or more, then the above entropy daemon can indeed speed up boot and resolve issues of several different kinds: https://github.com/MichaIng/DietPi/issues/2806
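The entropy check can be sketched as two small helpers. Both function names are hypothetical, the 20 second limit is the rule of thumb from the post above, and the exact wording "crng init done" is an assumption about the kernel message, so fall back to the plain grep if nothing is printed.

```shell
# Hypothetical helper: print the kernel timestamp (in seconds) of the
# first "crng init done" line found in kernel log text on stdin.
crng_init_seconds() {
    sed -n -E 's/^\[ *([0-9]+\.[0-9]+)\].*crng init done.*/\1/p' | head -n 1
}

# Hypothetical helper: succeed if the given number of seconds reaches the
# limit (default 20, the rule of thumb mentioned above).
crng_init_is_slow() {
    awk -v t="$1" -v limit="${2:-20}" 'BEGIN { exit !(t + 0 >= limit + 0) }'
}

# Usage:
#   secs="$(dmesg | crng_init_seconds)"
#   crng_init_is_slow "$secs" && echo "entropy pool was slow to initialise"
#   cat /proc/sys/kernel/random/entropy_avail   # currently available entropy
```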

Ok so I managed to switch over to persistent logging. In doing so I’m really confused about some of what I’m seeing - though I surmise one is because I wasn’t intended to view the raw log entries in the files in /var/log/journal folders. I tried to, but they’re all gibberish as far as I can see with bits/pieces of journal entries.

What is more confusing though is that I’m not seeing the journal entries relative to the most recent restart. Right now logging in I can see that the device has been up 15 minutes as it reports. When checking the journalctl output, the last log line I’m seeing is 11:59:17 EDT, but current time is 15:13, and yet the device is reporting current date output as 7/4/19 11:52:05.

So there are logs future from current date/time. And additionally in the log output I can see some random lines intermingled that are from Jul 2 right in between lines from Jul 4.

Also I snagged a screenshot of what I mean with the MOTD - it doesn’t show you much other than where the hangup is - and I’ve not let it sit long enough to see how long it will last, but it’s definitely more than 30 seconds that I’ve waited before.

So a bunch of oddities and the date problem leads me to believe there is potentially an on-board problem since it can’t seem to keep time anymore.

root@odyssey:/mnt/dietpi_userdata# date

Thu 4 Jul 11:52:05 EDT 2019

root@odyssey:/mnt/dietpi_userdata# journalctl

-- Logs begin at Thu 2019-07-04 11:17:01 EDT, end at Thu 2019-07-04 11:59:17 EDT. --
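To put a number on the mismatch, the newest journal timestamp can be compared with the system clock. This is only a sketch with a hypothetical function name. In the output above the journal ends at 11:59:17 while date reports 11:52:05, so the journal is 432 seconds ahead of the clock.

```shell
# Hypothetical helper: print how many seconds a journal timestamp ($1,
# Unix epoch as printed by `journalctl -o short-unix`) lies ahead of the
# clock ($2, defaults to now). A negative result means it is in the past.
journal_ahead_seconds() {
    last="${1%%.*}"            # drop the fractional part
    now="${2:-$(date +%s)}"
    echo $(( last - now ))
}

# Usage:
#   journal_ahead_seconds "$(journalctl -q -n 1 -o short-unix | cut -d' ' -f1)"
```

A clearly positive value right after boot would suggest that the clock had not yet been set by time sync when the command was run, rather than that the logs themselves are damaged.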

1activegeek

Sorry for the late reply.

Most probably it was a time sync update that caused the future timestamps. However, another reboot should solve it, or you can simply ignore it.

What you describe with the hanging MOTD pretty much sounds like an entropy issue or one with IPv6.

So I would try to disable IPv6 (dietpi-config > Network Options: Adapters) and install an entropy daemon as mentioned above. Btw better than haveged on RPi is:

apt install rng-tools

This is an alternative that consumes less RAM but does not work on all machines. But on RPi it works and is default on fresh Raspbian as well.

MichaIng

Sorry to ping you on an old thread, but I wanted to circle back to highlight something in DietPi that must be either incompatible or problematic with the Rock64 board I have. A few things of note to highlight my rationale:

- After the issues described here which were persistent over and over, fresh new images, different SD cards, etc - always the same issue. After running at most a day, it would continually have random reboots and some would cause the system to hang. This never went away and I was forced to move the workload I had off of the device.

- I’ve now come back to trying to play around again and found the same issue. This time I tried using the Rock64 default images for Ubuntu, and it has now been up and running for almost 5 days straight - same hardware, same SD card, and same network/power supply as I was running when I just had DietPi in it and crashing.

- I don’t know how to identify if I have a V2 or V3, but the Rock64 site does indicate that the Rock64 is only compatible with DietPi for Ver 2 ONLY. I’m wondering if I have a Ver 3, and there is something in your image that is incompatible with the newer board.

- My guess is that something may have changed in your code around the timeframe of my initial post here. Prior to my posts noticing that the device was having the reboot/freeze issue, I was running DietPi religiously without issue for quite some time.

All this to say, I’d love to try and help you isolate or find out what could be wrong - but I’m not sure where to start. I’m not a coder, so I’m not likely to be good at digging through the repo to find which major changes could point to the version that made the shift. But knowing that I can successfully run an Ubuntu base image with no issue tells me something in the image must be the cause. I’d rather run DietPi as I like your setup, menus, custom scripts, and slimmed image - but I can’t sacrifice stability for features.

As a follow-up, and this leads me to believe the Rock64 documentation is possibly wrong - I believe I have a Ver2, as I now see that the board actually has “Rock64_VER2.0” printed on it. That said, I do notice in the diagrams on the wiki that the main difference, I believe at a quick glance, is that V3 has an RTC built into the board. I’m wondering if it’s possible that something was changed around the clocking to address the Ver 3.0 boards? And/or if something around time was the cause of the original issue.